Journalism in the era of digital bullshit

Some thoughts on common issues with news coverage of social media manipulation and online deception

Fake accounts, bots, burners, troll farms, and other topics related to deceptive online activity and social media manipulation have been the subject of numerous reports in mainstream media over the last decade. Much of this coverage, however, suffers from common problems that render the resulting reporting uninformative and difficult for readers/viewers to trust. News reports often lack examples of the fake accounts and content being reported on, and are frequently predicated on unreliable or unauditable tools and methods for detecting inauthenticity. Additionally, journalists and outlets sometimes themselves become vectors for misinformation, a phenomenon that is exacerbated by a media environment which rewards the publication of exciting information over the publication of true information.

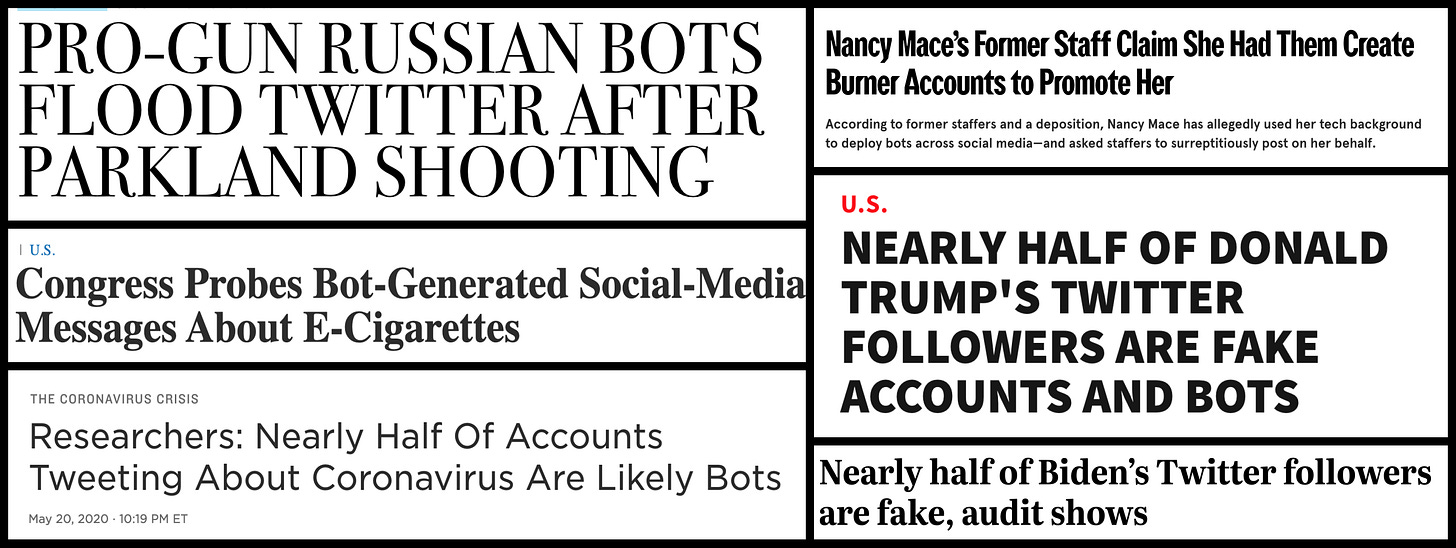

What do articles about pro-gun rights Russian bots, pro-vaping bots, massive numbers of bots tweeting about COVID-19, Nancy Mace burner accounts, fake Donald Trump followers, and fake Joe Biden followers, among many others, have in common? There are zero examples of bots, burners, or fake accounts, or any of the content they posted or reposted, anywhere in the respective articles. This is an all-too-common flaw in mainstream reporting on social media manipulation, and it renders the resulting reporting difficult to trust. When reporters fail to identify even a single example of an alleged bot or fake account in coverage of a story involving bots or fake accounts, it leaves the reader with little or no sense of what the fake accounts were doing or what was “fake” about them. Additionally, failure to include examples raises the quite reasonable suspicion that the journalist(s) reporting on the alleged fake accounts lack confidence in the identifications they have made, if any.

A related problem: In many cases, articles about bots or fake accounts that fail to include example accounts instead rely on proprietary tools with opaque algorithms, or papers based on such tools, as evidence of inauthenticity. There are multiple problems with this approach; for one, the tools used are often not intended for the purpose of finding fake accounts at all. For example, both the “Nearly half of Trump’s Twitter followers are fake” and “Nearly half of Biden’s Twitter followers are fake” articles rely on tools that tend to conflate fake followers and various types of inactive followers. For people using these tools to see how many of their followers are active, this is fine, but for determining how many of an account’s followers are fake, it isn’t particularly useful. In the case of Biden and Trump, it is especially useless because the current U.S. president is frequently offered as a suggested follow to U.S. users during account creation, so a large share of their followers can be expected to be real people who signed up and then rarely or never used the platform.

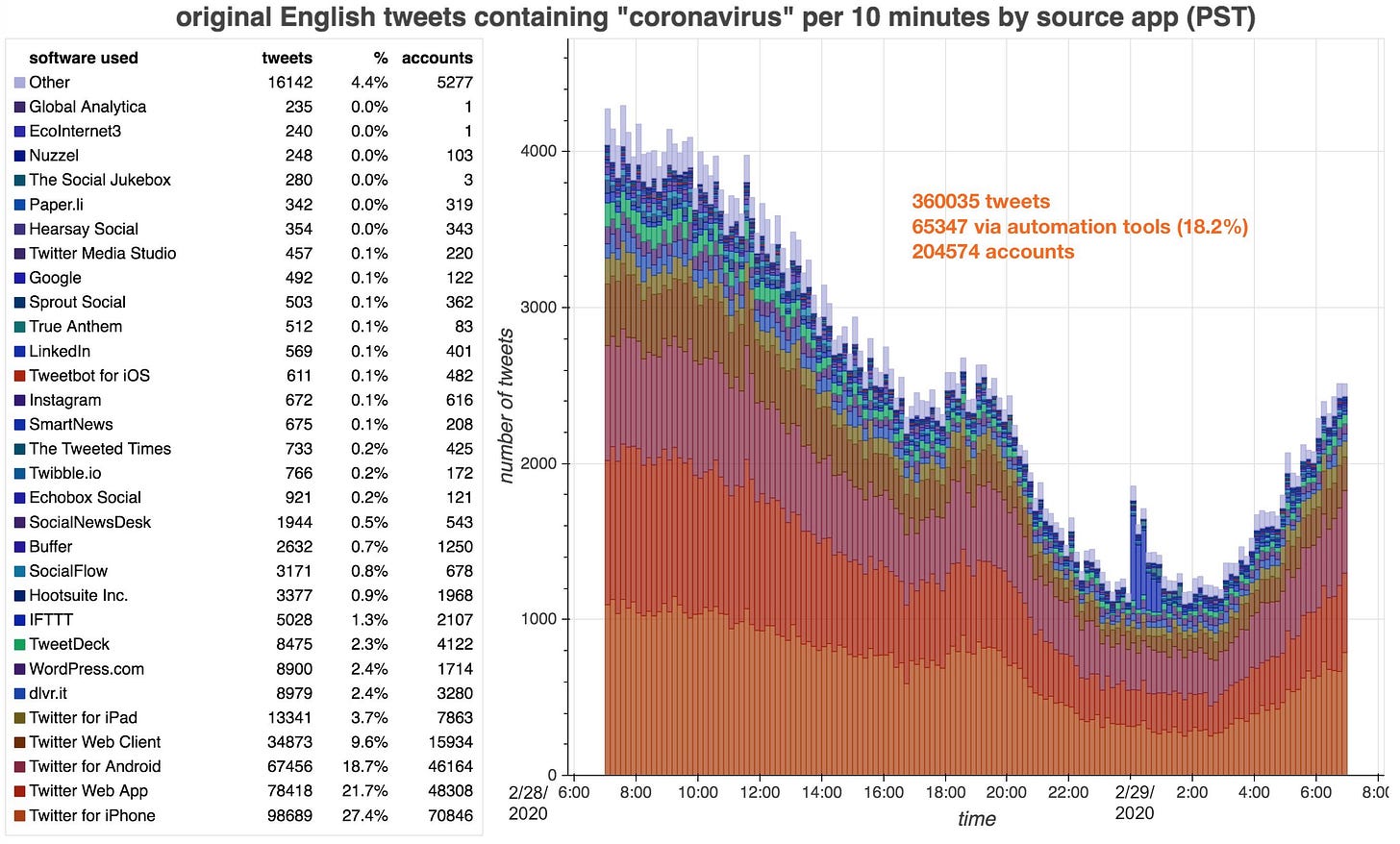

Even in cases where tools intended for detection of fake or automated accounts are employed (and work properly, which they often don’t), these tools on their own are insufficient to judge inauthentic activity without looking at context. For example, the 2020 article asserting that 45% of accounts posting about COVID-19 at the beginning of the pandemic were bots is exaggerated but broadly correct, though not for any sinister reason. At that time, most news feeds on Twitter were bots: automated accounts that simply tweeted out headlines and links to news stories 24/7. Since COVID-19 was the biggest news story on the planet in early 2020, these bots unsurprisingly tweeted a lot of news about COVID-19, and there was nothing particularly nefarious or interesting about it.

Journalists and news organizations are themselves targets of disinformation efforts, complicating things further. In several cases, reporters have cited false information from inauthentic accounts, rather than debunking it or scrutinizing the accounts involved. To name just a few examples: bogus accounts operated by Russia’s Internet Research Agency were frequently quoted by US and UK media in 2016 and 2017; reporters from several outlets retweeted a fake Twitter account run by white nationalist group Patriot Front in 2021; and multiple journalists were taken in by a repurposed cryptocurrency account that plagiarized genuine reporting on the Ghislaine Maxwell trial.

In some cases, outlets have run entirely fabricated stories at the behest of disinformation purveyors, such as a 2022 piece in The Times written by a fictional Ukrainian journalism student, and spectacularly bad Daily Beast coverage from 2023 that falsely portrayed multiple left-wing social media users as pro-DeSantis fake accounts and described an AI-generated face as a “sexual meme” of a real child. (The Times quickly withdrew the article in question once made aware of the problem; The Daily Beast article is, unfortunately, still online at the time of this writing.)

The rise of generative AI has made these problems worse. Several of the incidents discussed in this article, including the Patriot Front sock account, the fake Ukrainian journalism student, and the bogus DeSantis troll army story, involved StyleGAN-generated faces in varying ways. Over the last couple of years, news outlets have increasingly presented AI-generated images and videos harvested from social media as real footage of war, earthquakes, and prominent political figures, often without even being aware of it.

Ultimately, all of the above is exacerbated by an information environment driven by click and view counts, which tends to favor dramatic tabloid-style news stories over accurate investigations. Partisanship worsens the problem, as inaccurate coverage tends to get shared anyway when it aligns with the political preferences of the sharer. To change this, journalists and outlets covering stories of this sort should, at a minimum, get in the habit of providing examples of the fake accounts and content being covered, as well as discussing the reasoning and tools used to diagnose them as suspect. A higher dose of critical thinking regarding sensational stories on the part of both readers and reporters would also help considerably.