Graphing in the blue skies

How to generate repost network graphs from Bluesky search results

Repost network graphs can be a useful tool for studying the propagation of specific narratives and pieces of information on Twitter-esque social media platforms. Prior to the removal of the free Twitter REST API, researchers (including myself) frequently used such graphs to visualize the spread of hashtags, trending topics, links to websites, and other phenomena on that platform.

Although assembling the necessary datasets to generate repost network graphs for X/Twitter content is no longer feasible for most people, similar methods can be used to generate such diagrams for more open platforms such as Bluesky. This article describes the process of producing a repost network for Bluesky posts containing the #Caturday hashtag, but the methods used can be applied to any set of Bluesky search results.

```python
from atproto import Client
import json
import numpy as np
import pandas as pd
import random
import requests
import time
import warnings

warnings.filterwarnings ("ignore")

# call an API method, retrying with exponential backoff on failure
def retry (method, params, retries=5):
    delay = 5
    while retries > 0:
        try:
            r = method (params)
            return r
        except Exception:
            print (" error, sleeping " + str (delay) + "s")
            time.sleep (delay)
            delay = delay * 2
            retries = retries - 1
    return None

# keep only the first item seen for each value of key
def deduplicate (items, key):
    keys = set ()
    result = []
    for item in items:
        k = item[key]
        if k not in keys:
            result.append (item)
            keys.add (k)
    return result

# page through search results until the limit is reached
def loop_search_posts (query, client, limit=50000):
    r = retry (client.app.bsky.feed.search_posts,
               {"q" : query, "limit" : 100})
    if r is None:
        return None
    cursor = r["cursor"]
    rows = []
    rows.extend (r.posts)
    until = None
    while cursor is not None and len (rows) < limit:
        time.sleep (1)
        r = retry (client.app.bsky.feed.search_posts,
                   {"q" : (query + until) if until else query,
                    "cursor" : cursor, "limit" : 100})
        if r is None:
            break   # out of retries; keep what was downloaded so far
        cursor = r["cursor"]
        rows.extend (r.posts)
        if cursor is None and len (rows) < limit:
            # the cursor ran out; restart the search from the
            # timestamp of the oldest post seen so far
            t = " until:" + rows[-1]["indexed_at"]
            if t != until:
                until = t
                print (until)
                r = retry (client.app.bsky.feed.search_posts,
                           {"q" : query + until, "limit" : 100})
                if r is None:
                    break
                cursor = r["cursor"]
                rows.extend (r.posts)
    return [item.model_dump () for item in deduplicate (rows, "uri")]

# download the accounts that reposted a given post
def get_reposted_by (uri, client, limit=50000):
    r = retry (client.app.bsky.feed.get_reposted_by,
               {"uri" : uri, "limit" : 100})
    if r is None:
        return None
    cursor = r["cursor"]
    rows = []
    rows.extend (r.reposted_by)
    while cursor is not None and len (rows) < limit:
        time.sleep (1)
        r = retry (client.app.bsky.feed.get_reposted_by,
                   {"uri" : uri, "cursor" : cursor, "limit" : 100})
        if r is None:
            break   # out of retries; keep what was downloaded so far
        cursor = r["cursor"]
        rows.extend (r.reposted_by)
    return [item.model_dump () for item in rows]

# search for posts, then attach reposters to sufficiently reposted posts
def search_and_hydrate_reposts (query, client,
                                min_reposts=10, limit=50000):
    posts = loop_search_posts (query, client, limit=limit)
    if posts is None:
        return []   # the initial search failed
    for post in posts:
        uri = post["uri"]
        # repost_count may be missing, so treat None as zero
        if (post.get ("repost_count") or 0) >= min_reposts:
            try:
                reposts = get_reposted_by (uri, client)
                if reposts is not None and len (reposts) > 0:
                    post["reposts"] = reposts
            except Exception:
                print ("error fetching reposts for " + uri)
    return posts

client = Client ()
client.login ("<handle>", "<password>")
results = search_and_hydrate_reposts ("#caturday", client)
with open ("caturday.json", "w") as file:
    json.dump (results, file, indent=2)
```

The search_and_hydrate_reposts function in the Python code above uses the atproto Python module to download up to 50,000 recent Bluesky search results for a given query (in this case, the #Caturday hashtag) in reverse chronological order, and then downloads the list of accounts that reposted each post that was reposted at least ten times. The number of search results and minimum number of reposts can be adjusted. To keep the source code listings in this article reasonably brief, logic to resume the download if it crashes partway through is not included, but it is not difficult to implement.
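The resume logic mentioned above could look something like the following minimal sketch. It is not the code used for this article: the `hydrate_with_checkpoint` and `load_checkpoint` functions, the `fetch_reposts` callable, and the JSON Lines checkpoint file are all assumptions introduced for illustration. The idea is to append each post to a file as soon as it has been processed, and to skip any URI already present in that file on the next run.

```python
import json
import os

def load_checkpoint(path):
    """Return already-processed posts from the checkpoint, keyed by URI."""
    done = {}
    if os.path.exists(path):
        with open(path, "r") as f:
            for line in f:
                line = line.strip()
                if not line:
                    continue
                try:
                    post = json.loads(line)
                except json.JSONDecodeError:
                    continue  # partial line left behind by a crash
                done[post["uri"]] = post
    return done

def hydrate_with_checkpoint(posts, fetch_reposts, path, min_reposts=10):
    """Attach reposts to posts, writing each finished post to a JSON
    Lines file so that an interrupted run can pick up where it left off.
    fetch_reposts is a hypothetical callable mapping a post URI to a
    list of reposter dicts (for example, a wrapper around a function
    like get_reposted_by)."""
    done = load_checkpoint(path)
    with open(path, "a") as f:
        for post in posts:
            uri = post["uri"]
            if uri in done:
                continue  # finished during an earlier run
            if (post.get("repost_count") or 0) >= min_reposts:
                reposts = fetch_reposts(uri)
                if reposts:
                    post["reposts"] = reposts
            f.write(json.dumps(post) + "\n")
            f.flush()
            done[uri] = post
    # return the posts in their original order
    return [done[post["uri"]] for post in posts]
```

If the script crashes, rerunning it with the same checkpoint path repeats only the work for posts that had not yet been written to the file.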

```python
import json
import numpy as np
import pandas as pd

# turn hydrated posts into one row per (original poster, reposter) edge
def generate_edges (posts, min_reposts=10, normalize_handles=True,
                    node_colorizer=None, edge_colorizer=None):
    rows = []
    for post in posts:
        if "reposts" in post and len (post["reposts"]) >= min_reposts:
            uri = post["uri"]
            orig = post["author"]
            handle = orig["handle"]
            did = orig["did"]
            for repost in post["reposts"]:
                rows.append ({
                    "uri" : uri,
                    "orig_did" : did,
                    "orig_handle" : handle,
                    "reposter_did" : repost["did"],
                    "reposter_handle" : repost["handle"]
                })
    edges = pd.DataFrame (rows)
    # one node per reposted account, sized by number of reposts
    g = edges.groupby (["orig_did", "orig_handle"])
    nodes = pd.DataFrame ({"count" : g.size ()}).reset_index ()
    nodes = nodes[["orig_did", "orig_handle", "count"]]
    nodes["radius"] = np.sqrt (nodes["count"])
    if node_colorizer:
        nodes["color"] = nodes.apply (node_colorizer, axis=1)
    df = edges.merge (nodes, on=["orig_did", "orig_handle"])
    if node_colorizer:
        if edge_colorizer:
            df["edge_color"] = df.apply (edge_colorizer, axis=1)
        else:
            # by default, edges take the color of the reposted account
            df["edge_color"] = df["color"]
    if normalize_handles:
        # use the first handle seen for each DID so that a handle
        # change does not split one account into several nodes
        dids = {}
        for i, r in df.iterrows ():
            did = r["orig_did"]
            if did not in dids:
                dids[did] = r["orig_handle"]
            did = r["reposter_did"]
            if did not in dids:
                dids[did] = r["reposter_handle"]
        df["orig_handle"] = df["orig_did"].apply (lambda i: dids[i])
        df["reposter_handle"] = df["reposter_did"].apply (
            lambda i: dids[i])
    return df

# shade nodes from pink (rarely reposted) to green (heavily reposted)
def by_repost_count (row):
    green = min (row["count"] // 5, 255)
    pink = hex (max (16, 255 - green))[2:]
    return "#" + pink + hex (max (16, green))[2:] + pink

with open ("caturday.json", "r") as f:
    data = json.load (f)
df = generate_edges (data, node_colorizer=by_repost_count)
df.to_csv ("caturday_edges.csv", index=False)
```

In order to produce the network graph, the posts and reposts need to be transformed into a set of edges, where each node is an account and an edge connecting two accounts indicates that one account reposted the other. The generate_edges function above accomplishes this, and contains some optional logic to prevent handle changes from resulting in multiple nodes for the same account. Functions for coloring the nodes and edges can be provided if desired; the above example colors the #Caturday repost network by the number of times each account was reposted, and saves the results to a CSV file.
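To make the color mapping concrete, here is a small standalone sketch that repeats by_repost_count and evaluates it for a few repost counts. Plain dicts stand in for DataFrame rows, an assumption made purely so the example runs without pandas. The `by_stance` function and its `PROMOTERS` set are hypothetical, and illustrate how a custom colorizer might color nodes from a hand-labeled list of handles rather than by count.

```python
def by_repost_count(row):
    # same logic as the article's colorizer: one step of green per 5 reposts
    green = min(row["count"] // 5, 255)
    pink = hex(max(16, 255 - green))[2:]
    return "#" + pink + hex(max(16, green))[2:] + pink

# hypothetical hand-labeled handles, for illustration only
PROMOTERS = {"example-one.bsky.social", "example-two.bsky.social"}

def by_stance(row):
    # red for accounts in the labeled set, green for everyone else
    return "#cc0000" if row["orig_handle"] in PROMOTERS else "#00aa00"

for count in (10, 500, 1275):
    print(count, by_repost_count({"count": count}))
# prints:
# 10 #fd10fd
# 500 #9b649b
# 1275 #10ff10
```

Note that with this mapping a node only becomes fully green at 1,275 or more reposts, so the divisor may need adjusting for smaller datasets.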

Although there are multiple Python libraries capable of generating network graphs, I generally use standalone open source graphing software to do so; specifically, Cytoscape, which can generate a network diagram from an imported CSV file and manipulate it in various ways. When importing the CSV file, you will need to specify which columns describe source nodes (in this case, the DID and handle of the account being reposted, along with the radius and color), which describe target nodes (the DID and handle of the account doing the reposting), and which are edge attributes (the edge color, if present).
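Before importing, it can be worth checking that the CSV file has the expected shape. The following is a rough sketch using only the Python standard library; the `summarize_edges` helper is hypothetical, and it assumes the column names produced by generate_edges.

```python
import csv
from collections import Counter

def summarize_edges(lines):
    """Count edges, distinct accounts, and reposts per source account.
    lines is any iterable of CSV lines, such as an open file."""
    reposted = Counter()
    accounts = set()
    edges = 0
    for row in csv.DictReader(lines):
        edges += 1
        reposted[row["orig_handle"]] += 1   # source node of the edge
        accounts.add(row["orig_did"])
        accounts.add(row["reposter_did"])   # target node of the edge
    return edges, len(accounts), reposted.most_common(5)

# usage, assuming the file written earlier exists:
# with open("caturday_edges.csv", newline="") as f:
#     print(summarize_edges(f))
```

The first two numbers should roughly match the edge and node counts Cytoscape reports after the import.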

After importing, the columns will need to be mapped to visual attributes of the graph using the node and edge style options. The screenshot above shows settings that will generally work OK, but may require tweaks from graph to graph in order for things to be readable. Cytoscape also provides multiple graph layout algorithms; the default (and the one I usually use) is the Prefuse Force Directed Layout, although the slower Edge Weighted Spring Embedded Layout is sometimes useful as well.

Here is the resulting #Caturday repost network graph. The most visible nodes represent the most frequently reposted accounts, which in this graph have been colored green. The #Caturday graph shows little in the way of organization or structure, with the vast majority of accounts grouped into a single cluster in the center of the graph. A single cluster graph of this sort is what one generally sees when the majority of the interactions are friendly amplification, and little conflict or division is present in the conversation being graphed.

When the conversation being graphed involves slightly more debate, the accounts arguing for different stances begin to group together. An example of this is recent Bluesky discussion of memes unwisely advising No Kings protesters to sit down if they encounter violence. While most of the Bluesky users discussing this had similar overall political stances and largely supported the protests, opinion was split as to whether to follow the advice in the memes. (TLDR: don’t.) The accounts promoting the memes (red) are largely grouped in one area of the graph, while the remainder of the graph (green) is composed of accounts debunking the advice in the memes. (Note that this graph was generated using the Edge Weighted Spring Embedded Layout algorithm rather than Prefuse Force Directed Layout.)

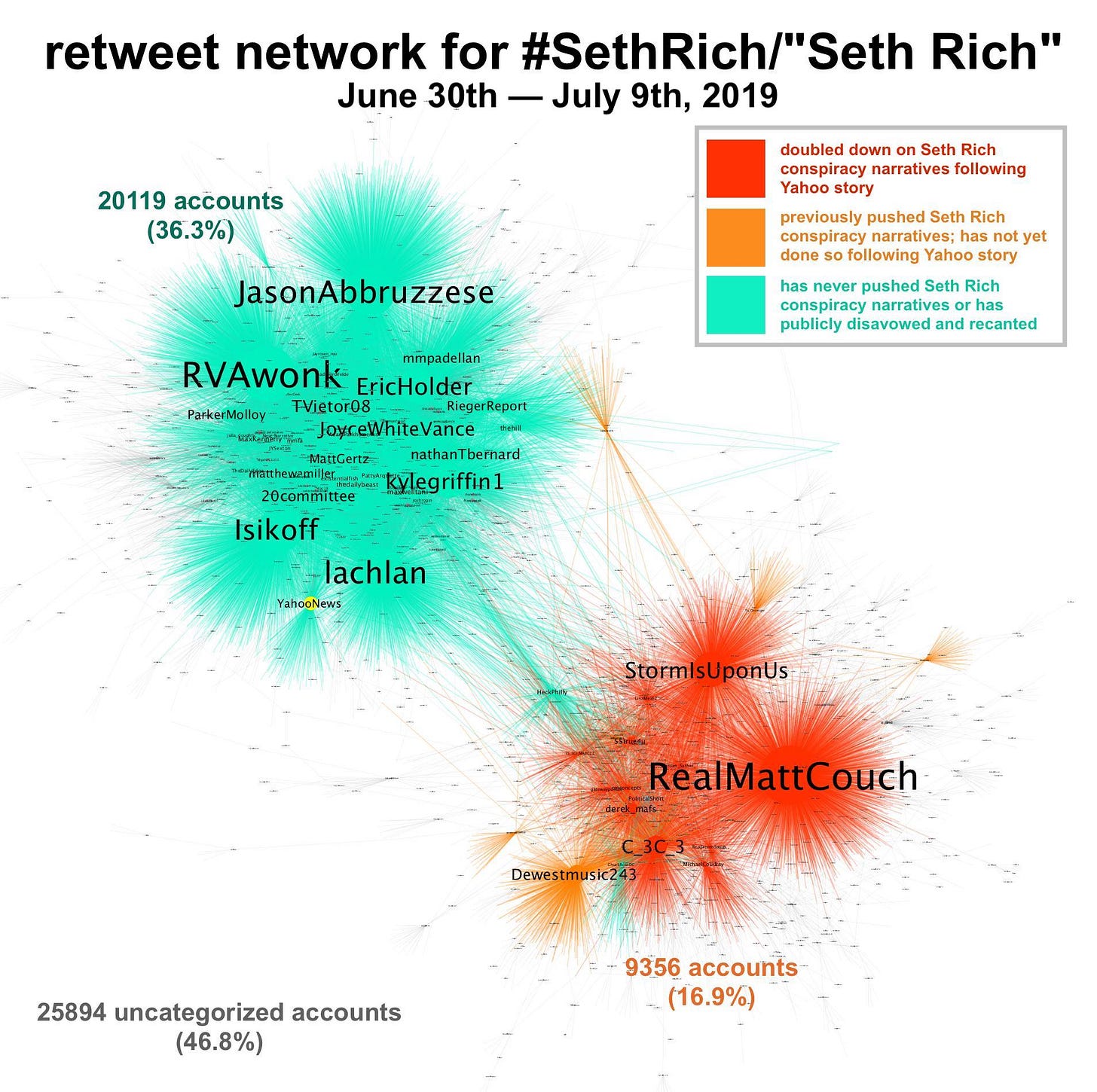

The greater the amount of data and degree of disagreement, the greater the clustering effect. A couple of older graphs from X/Twitter illustrate this nicely. The first, shown above, is a snapshot from July 2019 of discussion of conspiracy theories surrounding the 2016 murder of DNC staffer Seth Rich. In this graph, the (mostly right-wing) accounts promoting the conspiracy theories are in the orange cluster in the lower right, which is highly distinct from the turquoise cluster of (mostly left-wing) skeptics in the upper left. Graphs of this sort, where the clusters correspond strongly to major political factions, can be used to classify the partisan leanings of individual accounts with a high degree of accuracy based solely on which cluster a given account is in.
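One simple way to approximate cluster membership without a layout algorithm is to hand-label a few heavily reposted accounts and assign each reposter the label it amplified most often. The following is a rough sketch of that idea, not the method used to produce the graphs in this article; the `classify_reposters` function, the seed labels, and the example handles are all hypothetical.

```python
from collections import Counter, defaultdict

def classify_reposters(edges, seed_labels):
    """edges: iterable of (orig_handle, reposter_handle) pairs.
    seed_labels: dict mapping some reposted handles to a cluster label.
    Returns a dict mapping each reposter to the label of the seed
    accounts it reposted most often. Reposters with a tied vote, or
    with no reposts of labeled accounts, are left out."""
    votes = defaultdict(Counter)
    for orig, reposter in edges:
        label = seed_labels.get(orig)
        if label is not None:
            votes[reposter][label] += 1
    result = {}
    for reposter, counts in votes.items():
        ranked = counts.most_common(2)
        if len(ranked) == 1 or ranked[0][1] > ranked[1][1]:
            result[reposter] = ranked[0][0]
    return result

# toy example with made-up handles
seeds = {"a.test": "orange", "c.test": "orange", "b.test": "turquoise"}
edges = [("a.test", "x.test"), ("a.test", "y.test"), ("c.test", "y.test"),
         ("b.test", "y.test"), ("b.test", "z.test")]
print(classify_reposters(edges, seeds))
```

In the toy data, x.test and y.test are assigned to the orange cluster and z.test to the turquoise one. On real data, this kind of majority vote is only as reliable as the seed labels and the degree of separation between the clusters.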

A more complex example, shown below, is a graph of Twitter “follow train” posts from late 2021 and early 2022. (Follow trains are basically just lists of accounts to follow, with the expectation that anyone on the list will follow back anyone who follows them and reposts the follow train post.) The accounts posting the follow trains form no fewer than eleven distinct clusters based on language, nation of origin, and political stance, with some degree of overlap due to cases where accounts from one cluster amplified follow trains from a different cluster.