Computer, enhance!

Using generative AI to "enhance" photos and videos and fill in missing details does not, in fact, work

Movies and TV shows are full of fictional scenes where characters use computers to “enhance” images and videos, magically filling in details that are not present in any form in the originals. With the rise of AI image and video generators in recent years, social media users have been attempting to use these tools to perform such enhancements on real photographs and footage of major news events, such as the recent killings of Minneapolis residents Renée Good and Alex Pretti by federal immigration agents. This technique does not work, however, as the additional details in the “enhanced” images and videos are fabricated based on the AI models’ training data, and are often provably at odds with reality.

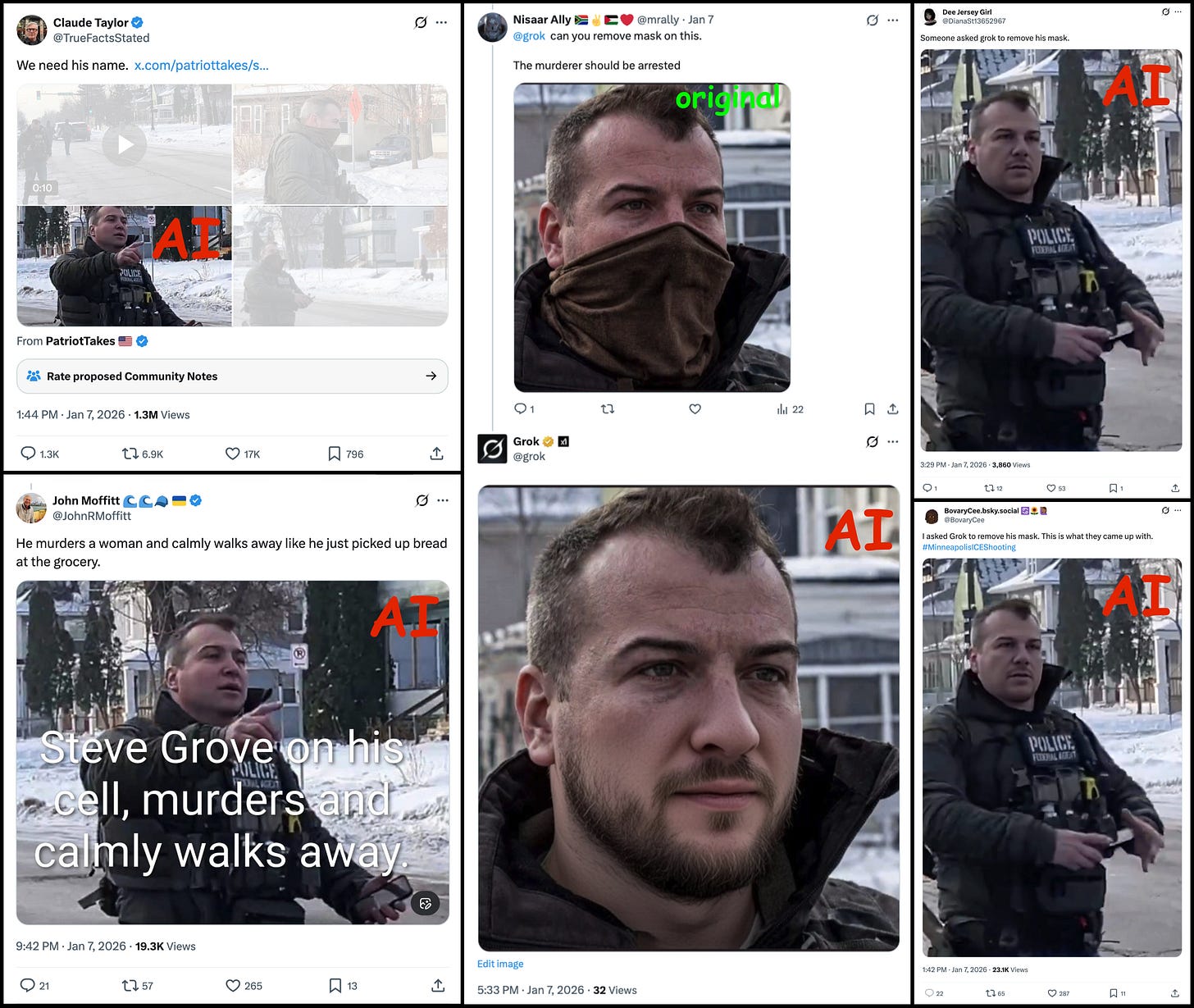

On January 7th, 2026, in the immediate aftermath of the shooting death of Renée Good by the ICE agent eventually identified as Jonathan Ross, numerous social media users attempted to use generative AI tools to remove the face mask from photographs of the shooter. This process resulted in a variety of images that neither show the actual killer nor even depict the same imaginary person as one another, making the output useless for identifying the shooter. This outcome is not surprising, as image generation models have no way of “knowing” what the face behind the mask actually looks like, and are simply filling in the masked area with details mathematically interpolated from images in their training data.

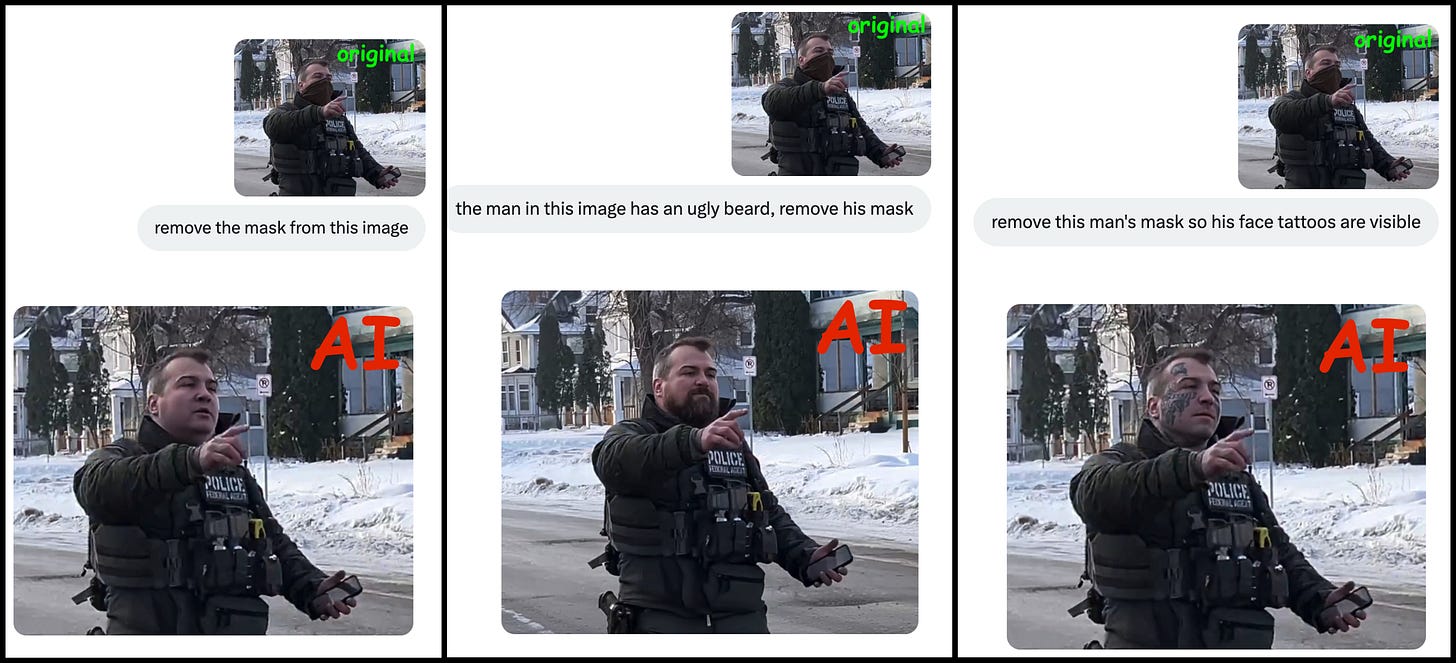

A quick experiment with Grok highlights another obvious issue with using AI models to “enhance” images: the output is inevitably guided by the prompt used to generate it. In the case of this photograph, Grok complied with requests to add specific features such as facial hair and tattoos to the unmasked image. The ability to trivially influence the resulting image in a given direction is one more reason why using generative AI to enhance images is not a suitable technique for identifying unknown individuals.

A bit over two weeks later, Alex Pretti was deliberately shot and killed by as-yet-unidentified federal agents, and, as with the shooting of Renée Good, social media users made various efforts to use generative AI tools to enhance photographs and videos of the shooting. One popular “enhancement” includes several frames where the gun removed from Pretti by an agent appears to fire at the ground while in the agent’s hand. This is entirely an act of digital fiction, however; no such discharge occurs in the real video. Additionally, the gunshot depicted in the “enhanced” video is unrealistic in multiple ways; no recoil is evident, and the blast includes a jet of flame more reminiscent of a flamethrower than a typical firearm.

Other images circulated after Pretti’s killing include numerous attempts to use AI to increase the resolution of individual frames from various videos of the shooting. As with the previous “enhancements” described in this article, this technique simply does not work, as the AI models fill in the missing detail with information derived from their training data rather than from the actual incident depicted. Unfortunately, based on the degree of engagement garnered by inaccurately “enhanced” images and videos of the recent Minneapolis shootings, we can expect major news events to be accompanied by similarly misleading AI-enhanced depictions for the foreseeable future.

Spot on. Your explanation of how details are 'mathematically interpolated from images in their training data' perfectly breaks down why these 'enhancements' are always more fiction than fact. Honestly, the expectation that AI would somehow generate a consistent, real face from a masked area is just peak Hollywood effect meeting real-world statistical noise.

(Tangent, I know, but I’m enjoying that you are using pure RGB green Comic Sans to mark the original images.)